Page 19 - 2025S

P. 19

12 UEC Int’l Mini-Conference No.54

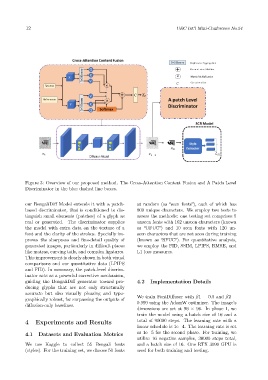

Figure 3: Overview of our proposed method. The Cross-Attention Content Fusion and A Patch Level

Discriminator in the blue dashed line boxes.

our BengaliDiff Model extends it with a patch- at random (as “seen fonts”), each of which has

based discriminator, that is conditioned to dis- 800 unique characters. We employ two tests to

tinguish small elements (patches) of a glyph as assess the methods: one testing set comprises 5

real or generated. The discriminator supplies unseen fonts with 162 unseen characters (known

the model with extra data on the texture of a as “UFUC”) and 10 seen fonts with 120 un-

font and the clarity of the strokes. Specially im- seen characters that are not seen during training

proves the sharpness and fine-detail quality of (known as “SFUC”). For quantitative analysis,

generated images, particularly in difficult places we employ the FID, SSIM, LPIPS, RMSE, and

like matras, curving tails, and complex ligatures. L1 loss measures.

This improvement is clearly shown in both visual

comparisons and our quantitative data (LPIPS

and FID). In summary, the patch-level discrim-

inator acts as a powerful corrective mechanism,

guiding the BengaliDiff generator toward pro- 4.2 Implementation Details

ducing glyphs that are not only structurally

accurate but also visually pleasing and typo-

graphically robust, far surpassing the outputs of We train FontDiffuser with β1 = 0.9 and β2 =

diffusion-only baselines. 0.999 using the AdamW optimizer. The image’s

dimensions are set at 96 × 96. In phase 1, we

train the model using a batch size of 16 and a

total of 90000 steps. The learning rate with a

4 Experiments and Results

linear schedule is 1e−4. The learning rate is set

as 1e−5 for the second phase. For training, we

4.1 Datasets and Evaluation Metrics

utilize 16 negative samples, 30000 steps total,

We use Kaggle to collect 55 Bengali fonts and a batch size of 16. One RTX 3090 GPU is

(styles). For the training set, we choose 50 fonts used for both training and testing.